Googlebot is Google’s web crawling bot, indexing content for the search engine. It navigates the web, discovering and updating pages within Google’s index.

Googlebot plays a critical role in search engine optimization, as it’s the primary tool Google uses to find and retrieve web pages. Understanding Googlebot’s function and patterns can help websites enhance their visibility in search results. By employing sophisticated algorithms, Googlebot efficiently identifies and visits websites, analyzing content and links.

It prioritizes new and updated content, so users see the most current information. As a webmaster, optimizing your site for Googlebot is essential for achieving better search engine ranking and driving organic traffic to your site. Engaging, relevant, and well-structured content is easier for Googlebot to crawl, which makes pages more likely to be indexed and to perform well in search.

Credit: backlinko.com

How Does Googlebot Work?

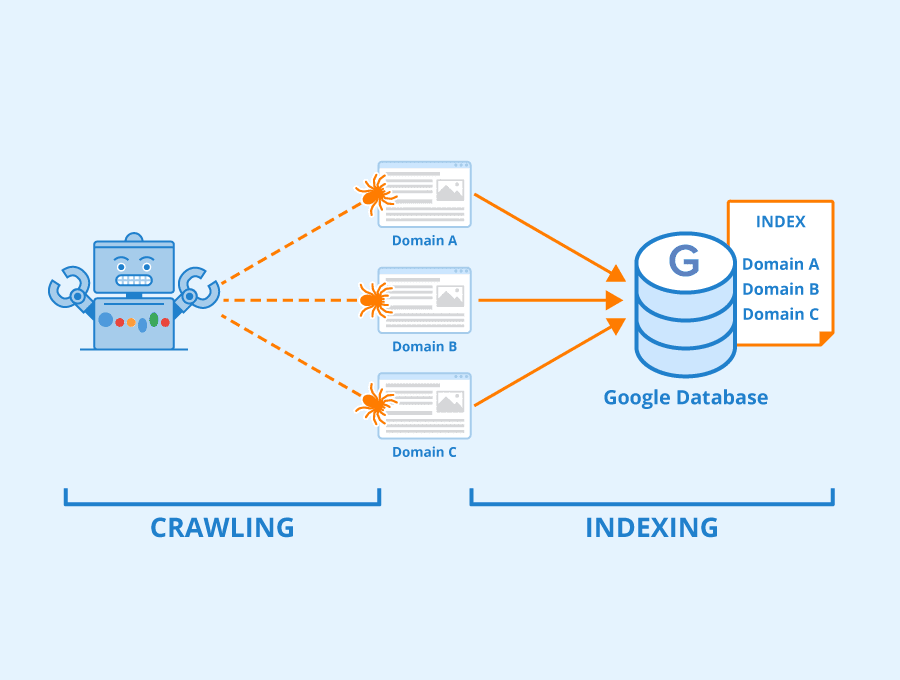

Crawling

Crawling is the first step in Googlebot’s journey across the web. Googlebot searches for new and updated content by following links. The sitemap.xml file is a guide, leading Googlebot to the content on a website. Website owners use robots.txt to communicate with Googlebot, indicating which parts of their site may be crawled and which should be skipped.

- Finds new web pages.

- Updates known pages.

- Uses sitemap.xml and robots.txt.
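To make the robots.txt part concrete, here is a minimal sketch using Python’s standard `urllib.robotparser` module. The robots.txt content, the paths, and the example.com URLs are made up for illustration, and this only shows how a well-behaved crawler might read the rules, not how Googlebot itself is implemented.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; the paths and sitemap URL are invented.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /admin/

User-agent: *
Disallow: /private/

Sitemap: https://example.com/sitemap.xml
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text that is already in memory (no network request)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(ROBOTS_TXT)

# Ask whether a given user agent may fetch a given URL.
print(parser.can_fetch("Googlebot", "https://example.com/blog/post"))
print(parser.can_fetch("Googlebot", "https://example.com/admin/login"))

# Sitemap locations declared in the file (Python 3.8+).
print(parser.site_maps())
```

With these sample rules, the blog URL is allowed for Googlebot, the /admin/ URL is not, and the declared sitemap URL is returned as a one-item list.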

| Step | Action |
|---|---|
| 1 | Process page content |
| 2 | Store in Google’s index |

- Processes JavaScript and CSS.

- Googlebot sees pages as users do.

Crawling Process of Googlebot

Discovering Pages

First, Googlebot must find your pages. It does this through sitemaps and its records of previously crawled URLs. New websites, updates, and links can signal Googlebot to visit.

- Seed URLs (a starting list of known pages) start the process.

- Algorithms prioritize new information.

- Changes to sites get noted for revisits.
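As a rough illustration of sitemap-based discovery, the sketch below reads a small sitemap with Python’s standard `xml.etree.ElementTree` and lists the most recently modified URLs first. The sitemap content and URLs are invented, and sorting by `lastmod` is only an assumption about how a crawler could favor fresh pages.

```python
import xml.etree.ElementTree as ET

# Hypothetical sitemap; the URLs and dates are invented for this example.
SITEMAP_XML = """<?xml version="1.0" encoding="UTF-8"?>
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url>
    <loc>https://example.com/</loc>
    <lastmod>2024-01-10</lastmod>
  </url>
  <url>
    <loc>https://example.com/blog/what-is-googlebot</loc>
    <lastmod>2024-02-01</lastmod>
  </url>
</urlset>
"""

# Namespace used by the sitemaps.org protocol.
NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def read_sitemap(xml_text: str) -> list[tuple[str, str]]:
    """Return (url, lastmod) pairs, most recently modified first."""
    root = ET.fromstring(xml_text.encode("utf-8"))
    entries = []
    for url in root.findall("sm:url", NS):
        loc = url.findtext("sm:loc", default="", namespaces=NS).strip()
        lastmod = url.findtext("sm:lastmod", default="", namespaces=NS).strip()
        if loc:
            entries.append((loc, lastmod))
    # ISO dates (YYYY-MM-DD) sort correctly as plain strings.
    return sorted(entries, key=lambda entry: entry[1], reverse=True)


for loc, lastmod in read_sitemap(SITEMAP_XML):
    print(lastmod, loc)
```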

Following Links

Next, Googlebot analyzes links on each page. Both internal and external links matter. It considers link quality and structure to discover content.

- Navigates through menus and directories.

- Assesses the relevancy of links to the content.

- Values trusted and authoritative sources.
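Link following can be sketched in a few lines. The example below uses Python’s standard `html.parser` and `urllib.parse` to pull links out of a page and separate internal from external ones. The HTML snippet and URLs are invented, and real crawlers handle many more cases (redirects, `nofollow`, canonical tags) than this toy does.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkCollector(HTMLParser):
    """Collects absolute URLs from <a href="..."> tags."""

    def __init__(self, base_url: str):
        super().__init__()
        self.base_url = base_url
        self.links: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href")
        if href:
            # Resolve relative links against the page URL.
            self.links.append(urljoin(self.base_url, href))


def split_links(base_url: str, html: str) -> tuple[list[str], list[str]]:
    """Return (internal, external) links found in the HTML."""
    collector = LinkCollector(base_url)
    collector.feed(html)
    site = urlparse(base_url).netloc
    internal = [u for u in collector.links if urlparse(u).netloc == site]
    external = [u for u in collector.links if urlparse(u).netloc != site]
    return internal, external


# Hypothetical page content.
PAGE = (
    '<nav><a href="/about">About</a><a href="blog/post-1">Post</a></nav>'
    '<p><a href="https://other.example.org/ref">Reference</a></p>'
)

internal, external = split_links("https://example.com/", PAGE)
print("internal:", internal)
print("external:", external)
```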

Crawl Budget

Every site has a crawl budget, which determines how often and how deeply Googlebot crawls it. Site size and health influence the budget.

| Factors Affecting Crawl Budget | Impact |
|---|---|
| Server speed | Fast servers allow more crawls. |
| Content updates | Frequent updates encourage revisits. |
| Error rates | High error rates reduce crawls. |
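One way to picture a crawl budget is as a cap on how many pages get fetched and how many clicks deep the crawler goes. The sketch below is a toy breadth-first crawl over an in-memory link graph. The graph, the `max_pages` and `max_depth` parameters, and the function name are all hypothetical, and Google’s actual budgeting is far more involved.

```python
from collections import deque

# Hypothetical site structure: each page maps to the pages it links to.
LINK_GRAPH = {
    "/": ["/blog", "/about"],
    "/blog": ["/blog/post-1", "/blog/post-2"],
    "/about": ["/team"],
    "/blog/post-1": ["/blog/post-2"],
    "/blog/post-2": [],
    "/team": [],
}


def crawl(start: str, max_pages: int, max_depth: int) -> list[str]:
    """Breadth-first crawl that stops at a page limit and a depth limit."""
    seen = {start}
    queue = deque([(start, 0)])
    visited = []
    while queue and len(visited) < max_pages:
        url, depth = queue.popleft()
        visited.append(url)
        if depth == max_depth:
            continue  # Do not follow links beyond the depth limit.
        for link in LINK_GRAPH.get(url, []):
            if link not in seen:
                seen.add(link)
                queue.append((link, depth + 1))
    return visited


print(crawl("/", max_pages=4, max_depth=2))
print(crawl("/", max_pages=10, max_depth=1))
```

With a budget of four pages the deeper posts are cut off, and with a depth limit of one only the home page and the pages it links to directly are visited. This is why a flat, well-linked site structure helps important pages get crawled.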

Indexing

Storing Information

Indexing starts with storing information. Here, Googlebot records new and updated web pages for future retrieval. Think of this as Google’s digital library where every piece of content is meticulously filed away. Each web page’s data, from text to images, is stored within vast Google databases, ensuring no detail is missed.

Organizing Content

After storing the data, organizing content is next. Google employs advanced algorithms to categorize and rank the stored information. Googlebot looks at factors like keywords, relevance, and site quality to organize content. This makes sure users find what they are searching for quickly and easily. Content must be useful, authoritative, and of high quality to rank well. Let’s delve deeper into how these steps help Googlebot make the internet a treasure trove of accessible information:

- Crawl: The web crawler begins with known web pages and follows internal links to other pages within the site, as well as external links to other websites.

- Process: Each discovered page is processed to understand the content and meaning.
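The storing and organizing steps can be pictured with a toy inverted index, a common search-engine data structure that maps each word to the pages containing it. This is a simplified sketch with made-up pages, and it says nothing about how Google’s real index is built or ranked.

```python
import re
from collections import defaultdict

# Hypothetical crawled pages: URL -> extracted text.
PAGES = {
    "https://example.com/crawling": "Googlebot follows links to discover new pages",
    "https://example.com/indexing": "Google stores processed pages in its index",
}


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(pages: dict[str, str]) -> dict[str, set[str]]:
    """Map every word to the set of URLs whose text contains it."""
    index = defaultdict(set)
    for url, text in pages.items():
        for word in tokenize(text):
            index[word].add(url)
    return index


def search(index: dict[str, set[str]], query: str) -> set[str]:
    """Return URLs that contain every word in the query."""
    words = tokenize(query)
    if not words:
        return set()
    results = set(index.get(words[0], set()))
    for word in words[1:]:
        results &= index.get(word, set())
    return results


index = build_index(PAGES)
print(search(index, "pages"))
print(search(index, "new pages"))
```

The first query matches both pages, while the second matches only the crawling page, because only that page contains both words.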

Rendering

Understanding JavaScript

JavaScript brings websites to life with interactive and dynamic content. For complete rendering, Googlebot must run JavaScript. Here’s how it works:

- Googlebot processes HTML and CSS first.

- Next, it executes JavaScript on the page.

- The final content appears after these steps.

Mobile-first Indexing

Most people browse the web on their phones, so Google primarily uses the mobile version of a site for indexing and ranking. This is mobile-first indexing. Check how Googlebot sees your mobile site for better SEO:

- Use mobile-friendly design.

- Make sure your site loads fast on phones.

- Test your site with Google’s mobile-friendly test tool.
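One small, commonly checked signal of mobile-friendly design is a responsive viewport meta tag. The sketch below looks for it with Python’s standard `html.parser`. The HTML snippets are invented, and a viewport tag is only one basic indicator, not a full mobile-friendliness test.

```python
from html.parser import HTMLParser


class ViewportFinder(HTMLParser):
    """Records the content of <meta name="viewport"> if the page has one."""

    def __init__(self):
        super().__init__()
        self.viewport = None

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        if (attributes.get("name") or "").lower() == "viewport":
            self.viewport = attributes.get("content") or ""


def has_responsive_viewport(html: str) -> bool:
    """True if the page declares a viewport sized to the device width."""
    finder = ViewportFinder()
    finder.feed(html)
    if finder.viewport is None:
        return False
    return "width=device-width" in finder.viewport.replace(" ", "")


# Hypothetical page heads.
RESPONSIVE = '<head><meta name="viewport" content="width=device-width, initial-scale=1"></head>'
FIXED = "<head><title>Desktop only</title></head>"

print(has_responsive_viewport(RESPONSIVE))
print(has_responsive_viewport(FIXED))
```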

Challenges Faced By Googlebot

Duplicate Content

Duplicate content poses a significant challenge for Googlebot. It occurs when identical or very similar content appears on multiple URLs. As a result, Googlebot struggles to determine which version is most relevant to a query. This can lead to the following problems:

- Confusion over which page to index and rank.

- Splitting of link equity among multiple copies.

- Potential for SEO penalties as Google frowns upon duplicated content.
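To check your own site for exact duplicates, one simple approach is to hash the normalized text of each page and group URLs that share a hash. The sketch below assumes you already have each page’s text. The sample pages are invented, and this only catches identical content after whitespace and case normalization, not near-duplicates.

```python
import hashlib
import re
from collections import defaultdict


def fingerprint(text: str) -> str:
    """Hash the text after collapsing whitespace and lowercasing."""
    normalized = re.sub(r"\s+", " ", text).strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def group_duplicates(pages: dict[str, str]) -> list[list[str]]:
    """Return groups of URLs whose normalized text is identical."""
    groups = defaultdict(list)
    for url, text in pages.items():
        groups[fingerprint(text)].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]


# Hypothetical pages: the first two differ only in spacing and case.
SAMPLE_PAGES = {
    "/shoes": "Red running  shoes on sale",
    "/shoes?ref=home": "red running shoes on sale ",
    "/hats": "Wool hats for winter",
}

print(group_duplicates(SAMPLE_PAGES))
```

Once duplicates are identified, a canonical URL or a redirect can tell search engines which version to treat as the primary one.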

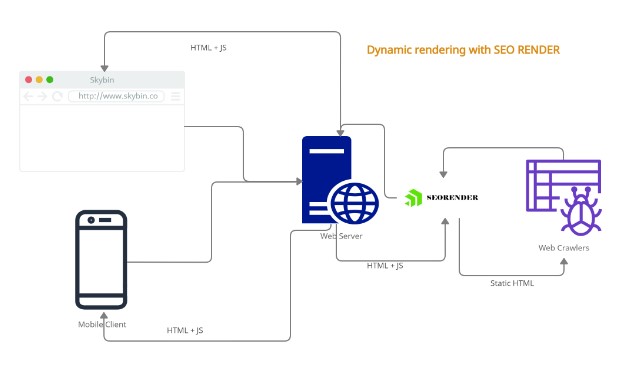

Dynamic Content

Dynamic content can also confound Googlebot. This content changes based on user behavior or preferences. Challenges include:

| Challenge | Explanation |
|---|---|
| Content Rendering | Googlebot might not see content that loads dynamically, missing crucial information. |
| Heavy scripts | Long load times for JavaScript-intensive sites can hamper crawling efficiency. |
| Session IDs | Unique URLs for each visitor create crawl issues, leading to duplicate content problems. |
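The session ID problem is easiest to see in code. The sketch below strips session-style query parameters so that every visitor’s URL collapses to the same address. The parameter names in `SESSION_PARAMS` are assumptions for the example, so substitute whatever your own platform uses.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical session parameter names; adjust for your own site.
SESSION_PARAMS = {"sessionid", "sid", "phpsessid"}


def strip_session_params(url: str) -> str:
    """Remove session-style query parameters (and any fragment) from a URL."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in SESSION_PARAMS
    ]
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


print(strip_session_params("https://example.com/shop?item=42&sessionid=abc123"))
print(strip_session_params("https://example.com/shop?item=42&sessionid=xyz789"))
```

Both calls return the same URL, https://example.com/shop?item=42, so a crawler (or your own internal links) would see one page instead of one per visitor.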

Impact On SEO

Website Visibility

Googlebot’s primary job is to index content for Google’s search. A website’s visibility depends on getting noticed and indexed by Googlebot. This process starts with crawling. If Googlebot can’t crawl your website efficiently, search engines might not index your content correctly. This can hurt your site’s visibility and SEO performance. Proper website structure and sitemap submission improve Googlebot’s ability to index your site. This directly impacts how easy it is for users to find you via search.

- Create a sitemap: This helps Googlebot find your pages.

- Use robots.txt wisely: It guides Googlebot on what to crawl.

- Responsive design: Google prefers mobile-friendly sites.

Ranking Factors

Googlebot’s crawl is only the first step: Google’s algorithms then gauge the quality of the pages it collects. Various ranking factors impact a site’s position in search results.

| Ranking Factor | Description | Impact on SEO |
|---|---|---|
| Content Quality | Relevant and valuable information | High impact |
| Keyword Usage | Natural inclusion of search terms | Must align with user inquiry |
| Page Speed | Quick load times | Essential for user experience |
| Backlinks | Links from reputable sites | Signals trust and authority |
| User Engagement | Interactions on the webpage | Influences relevancy signals |

Best Practices

Optimizing Crawlability

To let Googlebot discover your web pages, optimize your site’s crawlability. This means organizing your site so it’s easy for the spider to navigate.

- Create a clean, clear URL structure. Make URLs descriptive and simple to understand.

- Use a robots.txt file. This tells Googlebot which parts of your site to crawl and which to ignore.

- Incorporate a sitemap. A sitemap lists all your important pages. Google uses it as a guide to your site.
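If your platform doesn’t generate a sitemap for you, a basic one is easy to produce. The sketch below builds a minimal sitemap.xml with Python’s standard `xml.etree.ElementTree`. The page URLs and the `build_sitemap` function name are hypothetical, and optional fields such as `lastmod` are left out for brevity.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls: list[str]) -> str:
    """Return a minimal sitemap.xml document listing the given URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for page_url in urls:
        url_element = ET.SubElement(urlset, "url")
        ET.SubElement(url_element, "loc").text = page_url
    xml_bytes = ET.tostring(urlset, encoding="utf-8", xml_declaration=True)
    return xml_bytes.decode("utf-8")


# Hypothetical list of important pages.
IMPORTANT_PAGES = [
    "https://example.com/",
    "https://example.com/services",
    "https://example.com/blog/what-is-googlebot",
]

print(build_sitemap(IMPORTANT_PAGES))
```

Save the output as sitemap.xml at the root of your site, then submit it through Google Search Console.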

Making Content Accessible

Accessible content ensures that Googlebot and users can access and understand your content. Follow these steps:

- Include alt text for images. This helps Googlebot interpret what images are about.

- Ensure text is not embedded in images. Text within images is unreadable by Googlebot.

- Make your site mobile friendly. With mobile optimization, you cater to a vast number of users.

- Use header tags properly. Structure content with H1 to H6 tags. These help Googlebot understand content hierarchy.
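Two of the checks above, alt text and heading structure, are easy to automate. The sketch below uses Python’s standard `html.parser` to flag images without alt text and heading levels that skip a step. The HTML is invented, and the class and function names are hypothetical.

```python
from html.parser import HTMLParser

HEADING_TAGS = {"h1", "h2", "h3", "h4", "h5", "h6"}


class AccessibilityAudit(HTMLParser):
    """Collects images lacking alt text and the sequence of heading levels."""

    def __init__(self):
        super().__init__()
        self.images_missing_alt: list[str] = []
        self.heading_levels: list[int] = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "img":
            # Note: an empty alt can be intentional for purely decorative images.
            if not (attributes.get("alt") or "").strip():
                self.images_missing_alt.append(attributes.get("src") or "")
        elif tag in HEADING_TAGS:
            self.heading_levels.append(int(tag[1]))


def skipped_levels(levels: list[int]) -> list[tuple[int, int]]:
    """Return (previous, current) pairs where a heading level was skipped."""
    return [(prev, cur) for prev, cur in zip(levels, levels[1:]) if cur > prev + 1]


# Hypothetical page body.
HTML = (
    "<h1>What Is Googlebot?</h1>"
    '<img src="crawler.png" alt="Diagram of a web crawler">'
    "<h3>Crawling</h3>"
    '<img src="chart.png">'
)

audit = AccessibilityAudit()
audit.feed(HTML)
print("Images missing alt text:", audit.images_missing_alt)
print("Skipped heading levels:", skipped_levels(audit.heading_levels))
```

Here chart.png is flagged for missing alt text, and the jump from H1 straight to H3 is reported as a skipped level.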

Frequently Asked Questions On What Is Googlebot & How Does It Work?

What Is Googlebot And How Does It Work?

Googlebot is Google’s web crawling robot that finds and retrieves web pages, enabling them to be indexed. It navigates the web by following links, constantly collecting information on websites for Google’s search index.

What Do Google Bots Look For?

Google bots scan for relevant content, keywords, user experience signals, mobile-friendliness, and high-quality backlinks to assess and rank web pages.

How Many Google Bots Are There?

Google uses several bots, with the main ones including Googlebot for web crawling, Mobile-Friendly Test Bot for mobile usability, and AdsBot for Google Ads (formerly AdWords) advertisers.

How Do I Turn On Googlebot?

Googlebot is automatically activated to crawl websites. To ensure it can access your site, check your robots.txt file for permissions, submit a sitemap through Google Search Console, and confirm that your hosting server allows search engine bots.

Conclusion

Understanding Googlebot is crucial for website visibility. It’s the bridge between content and searchers. By optimizing for this web crawler, your site stands a better chance in SERPs. Keep your code clean and content fresh to ensure smooth Googlebot interactions.

Better indexing, better results – it’s that simple.
